In March 2022, the Department for Education (DfE) updated its pupil premium guidance for the start of the 2022-23 academic year, requiring schools to select from a ‘menu of approaches’ when funding targeted academic support (DfE, 2022a). Within targeted academic support, schools can use the following interventions:

• Interventions to support language development, literacy, and numeracy

• Activity and resources to meet the specific needs of disadvantaged pupils with SEND

• Deployment of teaching assistants and interventions

• One-to-one and small group tuition

• Peer tutoring

The flagship government initiative for supporting learners affected by the COVID pandemic was the creation of the National Tutoring Programme (NTP) as the main catch-up strategy, with the government white paper (DfE, 2022b) stating “we will deliver up to 6 million tutoring courses, each providing 15 hours of tutoring, by 2024”. This targeted strategy is not a cost-effective or sustainable model because of a range of well-documented challenges, which we will not go into here. The fundamental problem is funding, with the subsidy reducing to 60% in the 2022-23 academic year and then to 25% in 2023-24 (DfE, 2022c). Even before the recent unprecedented financial pressures on schools from inflation, rising gas and electricity prices and higher pay awards, future funding of the NTP was already an issue.

Why peer tutoring?

At WhatWorked we decided to focus on developing the evidence base for a strategy that is not widely used in schools. A rapid review of published pupil premium strategy statements for primary and secondary schools in three local authorities found that only 1% used or planned to use peer tutoring. This low uptake is surprising, as for the last decade the Education Endowment Foundation (EEF) Teaching and Learning Toolkit has highlighted peer tutoring as a high-impact, low-cost intervention based on extensive evidence (EEF, 2022). Uptake has not changed since a 2015 Sutton Trust survey, which also found that only 1% of schools used peer tutoring strategies (Sutton Trust, 2015).

We therefore began developing a WhatWorked evidence base focused on cross-age peer tutoring programmes for KS2 Mathematics. Implementation is key to the success of any intervention, as highlighted by the EEF guidance report “Putting Evidence to Work – A School’s Guide to Implementation” (EEF, 2021). At WhatWorked, we developed a bespoke delivery programme that provides an overview of the research evidence, step-by-step guidance for implementing the programme, resources and training materials, and the option to evaluate the intervention using one of three types of evaluation. Teachers could choose between teacher observations (1 Star), a pre-post test single group design (2 Star) or a mini-randomised controlled trial (3 Star).

For the 2 Star and 3 Star evaluations, teachers are provided with a protocol outlining the rationale, research design and planned data analysis. We also require teachers to register before starting the intervention; otherwise we are unable to complete their analysis. For the initial pilot of the Year 3 / Year 5 cross-age peer tutoring programme for multiplication and division, 30 teachers enrolled in the programme, 9 of whom pre-registered their evaluation. Schools that choose teacher observation do not need to register, as this approach involves no data analysis. Three schools selected the mini-RCT and six planned to use the pre-post test single group design. For schools selecting the mini-RCT, the evaluation used a waitlist design: control pupils received the intervention after the post-test, so that no learners were disadvantaged by missing out on the support.
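The waitlist mini-RCT can be sketched as a simple random allocation of pupils into an intervention group and a waitlist control group. This is a hypothetical helper, not WhatWorked's procedure (the text does not describe how pupils are randomised); it assumes individual pupils are allocated with equal probability and seeds the random number generator so the allocation can be reproduced and checked.

```python
import random


def allocate_waitlist(pupils, seed=None):
    """Hypothetical helper: randomly split pupils into intervention and waitlist control.

    The waitlist control group receives the intervention after the post-test,
    so no pupil misses out on the support.
    """
    rng = random.Random(seed)  # seeded so the same allocation can be reproduced
    shuffled = list(pupils)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd number of pupils, the extra pupil goes to the waitlist control.
    return {"intervention": shuffled[:half], "waitlist_control": shuffled[half:]}


groups = allocate_waitlist([f"pupil_{i}" for i in range(1, 11)], seed=2022)
print(groups["intervention"])
print(groups["waitlist_control"])
```

Fixing the seed means the allocation can be recorded alongside the pre-registration and regenerated later if needed.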

COVID and a heat wave

At the start of summer term 2, we had optimistically hoped that schools would face less disruption from COVID, but as we have all found over the last two years of the pandemic, the virus can return in even more transmissible variants and cause major disruption in schools. Within two weeks, six of the initial nine schools had postponed delivery of the programme to the Autumn term because of staff and pupil absences. In addition, a heat wave in the last week of term prevented one school from delivering the final lesson and post-test. Of the original nine schools, one completed the mini-RCT evaluation and another completed the pre-post test single group evaluation.

Despite these challenges, the feedback from schools has been positive, and we have shown that the online delivery model for supporting schools to plan, implement and evaluate an intervention works. On average, teachers took 48 minutes to complete the online course that sets up the intervention in their school.

Developing an evidence base

The main advantage of WhatWorked is its innovative approach to evidence generation: we aggregate the data from the mini-RCTs into a cumulative meta-analysis for each intervention. Therefore, even though our initial pilots were affected by COVID disruption and an extreme heat wave, we can start the evidence base with data from a small number of schools. As more schools replicate, implement and evaluate the intervention in the next academic year, we will update the overall effect size as the sample size increases. So, how do we analyse the data at WhatWorked?
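A cumulative meta-analysis recalculates the pooled effect size each time a new trial is added. The sketch below assumes a fixed-effect, inverse-variance model, which the text does not specify (WhatWorked may pool differently, for example with a random-effects model); the function names and the trial figures in the usage lines are hypothetical, not pilot results.

```python
import math


def d_variance(d, n_t, n_c):
    """Approximate sampling variance of a standardised mean difference d."""
    return (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))


def cumulative_meta(trials):
    """Pooled effect size and standard error after each mini-RCT is added.

    Assumes a fixed-effect, inverse-variance model. `trials` is a list of
    (effect_size, n_intervention, n_control) tuples in completion order.
    """
    results = []
    sum_w = 0.0   # running total of weights
    sum_wd = 0.0  # running total of weighted effect sizes
    for d, n_t, n_c in trials:
        w = 1.0 / d_variance(d, n_t, n_c)  # larger, more precise trials count for more
        sum_w += w
        sum_wd += w * d
        results.append((sum_wd / sum_w, math.sqrt(1.0 / sum_w)))
    return results


# Hypothetical trials for illustration only (not WhatWorked data).
for pooled, se in cumulative_meta([(0.40, 10, 10), (0.20, 12, 12), (0.30, 8, 9)]):
    print(f"pooled ES = {pooled:.2f} (SE {se:.2f})")
```

The standard error shrinks as each school's trial is added, which is how the overall estimate becomes more precise as the sample grows.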

Data analysis

The analysis we use shows how much students improved compared to their starting point (Gain). If a control group is used, it shows, more informatively, how much students improved compared to similar students who did not receive the intervention, which is similar to asking ‘what would have happened if the students had not received the peer tutoring?’
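As a rough illustration of the Gain idea, the sketch below computes each group's average gain and the extra gain of the intervention group over the control group. The function names and scores are hypothetical, and this raw difference is only a starting point for the standardised, baseline-adjusted analysis described below.

```python
from statistics import mean


def mean_gain(pre, post):
    """Average improvement from each pupil's starting point (post-test minus pre-test)."""
    if len(pre) != len(post):
        raise ValueError("pre-test and post-test scores must be paired by pupil")
    return mean(after - before for before, after in zip(pre, post))


def difference_in_gains(pre_t, post_t, pre_c, post_c):
    """Extra gain of intervention pupils compared with similar pupils in the control group."""
    return mean_gain(pre_t, post_t) - mean_gain(pre_c, post_c)


# Hypothetical scores, one entry per pupil.
print(difference_in_gains([10, 12, 9, 14], [15, 16, 13, 18],
                          [11, 12, 10, 13], [13, 14, 11, 15]))  # → 2.5
```

Here the intervention pupils gain 4.25 marks on average and the control pupils 1.75, so the control group answers the ‘what would have happened anyway?’ question by accounting for 1.75 of those marks.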

Our analysis reports the size of the impact (Effect Size) using a technique that is standard in education research. This expresses the size of the effect relative to the ‘noise’ (natural variation) in the data. Because effect sizes are on a common scale, they allow easy comparison of the effectiveness of different educational interventions.
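The text does not name the effect size statistic; one widely used option is Cohen's d, the difference in mean scores divided by the pooled standard deviation. The sketch below assumes that choice, and the scores are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardised mean difference: gap in means divided by the pooled SD."""
    n_t, n_c = len(treatment), len(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_t - 1) * variance(treatment) + (n_c - 1) * variance(control)) / (n_t + n_c - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)


print(cohens_d([2, 4, 6], [1, 3, 5]))  # → 0.5
```

A d of 0.5 means the intervention group's average is half a standard deviation above the control group's, whatever the test's raw mark scale.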

Data is analysed using statistical techniques at the standard required for publication in peer-reviewed research journals. Analysis of Variance (ANOVA) is used to test whether natural ‘noise’ in the data is a more likely explanation for the results than the intervention.
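A minimal one-way ANOVA compares the variance between group means with the variance within groups (the ‘noise’). This sketch stops at the F statistic and its degrees of freedom; turning F into a p-value needs the F distribution (for example `scipy.stats.f.sf(f, df_between, df_within)`), and `scipy.stats.f_oneway` performs the whole test. The function name and scores are hypothetical.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """F statistic: between-group variance relative to within-group 'noise'."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    means = [mean(g) for g in groups]
    k, n = len(groups), len(all_scores)
    # Spread of group means around the overall mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Spread of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f, df_b, df_w = one_way_anova_f([2, 4, 6], [1, 3, 5])
print(f"F({df_b}, {df_w}) = {f:.3f}")  # → F(1, 4) = 0.375
```

A small F like this one means the gap between groups is small relative to the noise; with only two groups, F equals the square of the pooled-variance t statistic.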

To get a clear picture of how much the intervention improves attainment, it is regarded as best practice to ‘filter out’ differences between intervention and control students at baseline; for example, one group is often slightly higher performing. Where a control group is used, Analysis of Covariance (ANCOVA) adjusts the Effect Size for differences between groups at baseline. In line with the high-quality reporting requirements of scientific publications, results are also adjusted for the distortion that occurs with small numbers of students. Furthermore, an intention-to-treat analysis is used in all WhatWorked impact evaluations.
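The baseline adjustment and small-sample correction can be sketched as below. It assumes a classical ANCOVA adjustment (the post-test gap minus the pooled within-group slope times the pre-test gap), standardisation by the pooled post-test SD, and Hedges' correction for small samples; the text does not say exactly which formulas WhatWorked uses, and the function names and scores are hypothetical. Under intention to treat, pupils are passed in by the group they were allocated to, whether or not they completed every session.

```python
import math
from statistics import mean, variance


def _within_sums(pre, post):
    """Sum of squares of pre-test and cross-products around one group's own means."""
    mx, my = mean(pre), mean(post)
    sxx = sum((x - mx) ** 2 for x in pre)
    sxy = sum((x - mx) * (y - my) for x, y in zip(pre, post))
    return sxx, sxy


def ancova_hedges_g(pre_t, post_t, pre_c, post_c):
    """Baseline-adjusted effect size with a small-sample correction (hypothetical helper)."""
    # Pooled within-group regression slope of post-test on pre-test.
    sxx_t, sxy_t = _within_sums(pre_t, post_t)
    sxx_c, sxy_c = _within_sums(pre_c, post_c)
    slope = (sxy_t + sxy_c) / (sxx_t + sxx_c)
    # Post-test gap, minus the part explained by the pre-test gap.
    adjusted_diff = (mean(post_t) - mean(post_c)) - slope * (mean(pre_t) - mean(pre_c))
    # Standardise by the pooled post-test SD (one common convention).
    n_t, n_c = len(post_t), len(post_c)
    pooled_sd = math.sqrt(((n_t - 1) * variance(post_t) + (n_c - 1) * variance(post_c))
                          / (n_t + n_c - 2))
    d = adjusted_diff / pooled_sd
    # Hedges' correction shrinks d towards zero when samples are small.
    correction = 1 - 3 / (4 * (n_t + n_c) - 9)
    return correction * d


# Hypothetical scores: the intervention group starts 1 mark higher at baseline.
g = ancova_hedges_g(pre_t=[2, 3, 4], post_t=[5, 7, 9], pre_c=[1, 2, 3], post_c=[2, 4, 6])
print(round(g, 2))  # → 0.4
```

In this example the raw post-test gap of 3 marks shrinks to 1 mark once the baseline advantage is adjusted for, and with only six pupils the correction factor of 0.8 reduces the effect size from 0.5 to 0.4.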

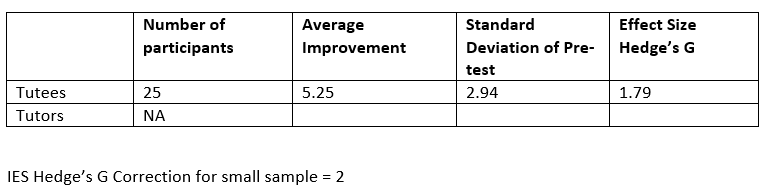

How effective were the peer tutoring pilots?

The initial pilot focused on a Year 3 / Year 5 cross-age peer tutoring programme on multiplication and division.

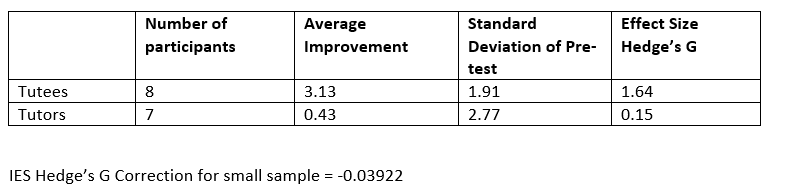

Pre-Post Test Single Group Design

The research design for the pre-post test evaluation does not include a comparison group, so its effect size should not be compared directly with those from the mini randomised controlled trials. However, the pre-post test design does allow the gain score to be calculated to show the improvement made over the course of the intervention.
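For the single group design, one common way to express the gain as an effect size is to divide the mean gain by the standard deviation of the pre-test scores. The sketch assumes that convention (others divide by the SD of the gains) and uses hypothetical scores; as noted above, the result is not comparable with a mini-RCT effect size.

```python
from statistics import mean, stdev


def pre_post_effect_size(pre, post):
    """Mean gain divided by the pre-test SD, for a design with no comparison group."""
    if len(pre) != len(post):
        raise ValueError("pre-test and post-test scores must be paired by pupil")
    gains = [after - before for before, after in zip(pre, post)]
    # Without a control group, part of this gain may be ordinary progress over time.
    return mean(gains) / stdev(pre)


print(pre_post_effect_size([2, 4, 6], [4, 7, 7]))  # → 1.0
```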

The initial intervention sample was n = 10 tutees and n = 10 tutors. For the post-test, 2 tutees and 3 tutors were absent, so the analysis sample for the school is n = 8 tutees and n = 7 tutors.
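The analysis sample follows directly from the enrolment and absence figures above. The short sketch below reproduces that arithmetic and also expresses the loss as an attrition rate, a figure the text does not report; the helper name is hypothetical.

```python
def analysis_sample(enrolled, absent):
    """Pupils with a post-test score, and the proportion lost to absence."""
    return enrolled - absent, absent / enrolled


# Figures from the single group pilot school described above.
for role, absent in [("tutees", 2), ("tutors", 3)]:
    n, attrition = analysis_sample(10, absent)
    print(f"{role}: n = {n}, attrition = {attrition:.0%}")
```

This gives 8 tutees and 7 tutors, matching the figures above, with attrition of 20% and 30% respectively.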